What is a Neural Network, Hey, it’s Zara Dar. In this Post, I’ll be talking about neural networks. Before we dive into neural networks, let’s talk about machine learning in general. Machine learning is a branch of artificial intelligence, or AI, that focuses on building systems that can learn from and make decisions based on data. I’m sure you’re hearing AI way too often these days, and you’re right to think it’s becoming more of a marketing term. Some even argue that John McCarthy, who coined the term in the 1950s, intended it to be a marketing term to attract funding. Anyways, unlike traditional programing,

Traditional Programming vs. Machine Learning

where we explicitly define the rules and logic, machine learning enables computers to learn patterns and make predictions without being explicitly programed for specific tasks. Let’s illustrate this with a real world example. Spam email detection. Let’s say I receive an email and I want to determine whether it’s spam or not spam. With traditional programing, I would need to write specific rules. For example, if an email contains words like “lottery”, “free” or “winner”,

Example: Spam Email Detection

it’s classified as spam. The limitation here is that I would need to anticipate and encode every possible rule. If spammers change their wording, the program may fail to identify new spam emails. On the other hand, with machine learning, instead of writing specific rules, we feed the machine learning algorithm a large data set of emails, each labeled – in the case of supervised learning – by humans as spam or not spam. The algorithm learns patterns from this data that differentiate spam from non-spam, and during training,

the algorithm will try to build a model that minimizes the classification error, or more generally, something we call a loss function. In this way, the algorithm can adapt to new forms of spam without explicit reprograming. To illustrate this, if I were to plot the data with each email representing a point on a representative components, for example, x1 could be a measure of suspicious words, and x2 could be a measure of how generic the email is. These features or components

could be engineered or learned. My hope is that the emails in my data plot are something like this. Now, this is obviously an oversimplified illustration, but I think you get the general idea. The stars would represent the spam and the circles would be the non-spam. I could simply draw a hyperplane, or in this case just a line to separate or classify these emails. Remember when I said we want to build a model?

Types of Machine Learning

Supervised Learning

Well, this hyperplane is the current simple model that defines which email is spam or not. So if I receive a new email, it could be simply plotted here to see if it is spam or not, depending on where it’s located. With respect to the separating hyperplane. Since the data, or the emails in this case, used to train the model were labeled, classifying the emails goes under supervised learning, which is one of the three types of machine learning. The other two being unsupervised learning. So for example, clustering news articles into categories. And the third is reinforcement learning, such as training a car’s autopilot through an algorithm that rewards for desirable actions and penalizes for undesirable actions. If you want me to discuss more of these concepts in future Posts, please let me know in the comments. What is a neural network?

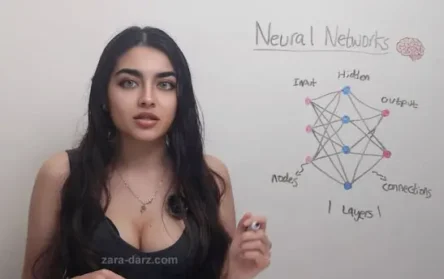

A neural network is a computational model developed around the mid 20th century, inspired by the way biological neurons in the human brain function. At its core, a neural network consists of layers of interconnected nodes, or neurons, that process data and generate outputs based on that data.

The network learns from input data by adjusting the connections, known as weights and biases, between these neurons to improve predictions or classifications over training iterations or time. The complexity and depth of these networks can vary depending on the problem they are designed to solve. The key components of the neural network are the nodes, the layers, and the connections. Neurons or nodes are the basic unit of a neural network. Neurons receive inputs, apply a mathematical operation, and pass the result on to the next layer.

Each neuron is connected to other neurons, forming a network. We also have layers which are broken down into three categories. The input layer, which is the first layer that receives the raw data input, such as numerical values, images, or text. Next we have the hidden layers, which are the intermediate layers where the data is transformed by the network. The number and size of these layers can vary depending on the network’s design. And finally we have the output layer,

Structure of a Neural Network

Neurons and Nodes

which is the final layer that produces the output of the network, such as a classification label or a predicted value. And finally, the third component of the neural networks are the connections or the weights and biases. Each connection between neurons has an associate weight, which determines the strength of the signal being passed. Biases are added to each neuron to shift the activation functions. Output. And this allows for more flexibility in the learning process. Mathematically, the operation within a neuron can be represented as follows.

Where wi represents the weights, xi is the input, and b is the bias, and f is the activation function that determines the neurons output. Let’s talk about f. First let’s look at the sum inside. None of the operations in X are non-linear. We only see linear operations. It’s just like ax + b where the xi’s are simply multiplied by the weights wi’s and added. That’s why we use activation functions. Activation functions like ,

the sigmoid function and the ReLU or the rectified linear unit, are commonly used to introduce non-linearity into the network, enabling it to learn complex patterns in the data. The sigmoid function is often used when the output needs to be interpreted as a probability, as its range is between 0 and 1. ReLU, on the other hand, introduces non-linearity while being computationally efficient, since it involves only a simple thresholding at zero. So if z is below zero, the neuron simply does not get activated. So maybe you can already see how these networks can represent complex functions by tuning which neurons get activated and which do not given a certain input. we’ve covered the basics of what neural networks are and how they’re structured. I understand that these concepts might seem a bit abstract right now, but in the next lecture I’ll introduce different types of neural networks and provide concrete examples to help make these ideas more clear and more practical.

Different Neural Network Architectures

Feedforward Neural Networks (FNN)

the purpose of this Post is to introduce you to some of the different types of neural network architectures if you didn’t know neural networks come in many forms or architectures and in fact new architectures are constantly being developed previously I introduced the basic idea of neural networks and discussed how they’re inspired by the neurons in our brains the question now is if all neurons networks use the same Core Concepts of neurons layers and weights,

why do we have so many different types the answer is that the type of data and specific tasks determine the architecture we want to employ you can very roughly think of it like choosing a vehicle if you are single and want to have fun you might go with something like a motorcycle but if you have a family an SUV might be the better fit in other words there’s usually a vehicle best suited for each purpose and similarly different neural network architectures are better suited for different tasks and types of data the fundamental building block of a

neural network is a neuron a neuron takes an input U and performs a mathematical operation to produce an output y this operation could be as simple as multiplying You by a constant or something more complex such as applying an active ation function , like re or rectified linear unit which we discussed in a previous Post to create a neural network we can stack neurons next to each other or in layers for instance.

we might have the output of the first neuron serve as the input of the second neuron creating a more complex function we can add multiple layers often called hidden layers with many neurons in each layer the information flows forward from the input layer through the hidden layers to the output layer this structure is called a feed forward neural network and it’s fully connected when every neuron in one layer is connected to every neuron in the next layer consider a simple example where we want a neural network to tell us if a photo contains a cat so we need to decide if our Network’s operations and number of neurons are sufficient after training to distinguish whether the photo has a cat a neural network that works well for image classification may not be the best choice for other tasks such as identifying spam emails and vice versa here’s a quick analogy in linear regression we have a predefined function y = ax + B during training you adjust a and b also known as the weights to best fit your data but you’re still limited by the linear form this is rough L

the same idea for neural networks where the architecture influences what kind of functions can be learned let’s now discuss some of the most common neural network architectures I’ll just briefly introduce the general structure of each and feel free to let me know in the comments if you’d like me to dive deeper into any of these feedforward neural networks are the most straightforward type of neural network and it’s what we’ve primarily been discussing so far data flows for forward from the input layer through the hidden layers to the output layer,

some example use cases of feed forward neural networks include simple classifications such as determining if an email is Spam or not and basic regressions such as predicting house prices using features like the square footage feed foral neuron networks are generally easy to implement but they are not always suitable for data with strong spatial or temporal relationships such as image classification or sequence modeling convolutional neural networks or cnns are commonly used for image or grid-like data.

Convolutional Neural Networks (CNN)

they rely on convolutional layers often combined with pooling layers and fully connected layers the idea is to use small matrices called filters or kernels which slide across the image to process patches of it for example a 2×2 pixel filter moves across the image to to detect patterns and features this allows us to detect features like edges textures and other patterns note that the weights of these filters are learned during training some use cases for CNN include image classification like recognizing if an image contains a cat or not they are also great at handling translational invariance meaning it doesn’t matter where the cat is in the photo a cat is a cat cnns are also used for detection for example self-driving cars use cnns to identify pedestrians and other vehicles additionally cnns are used for image segmentation such as identifying specific regions or organs in medical images CNN are very effective for capturing spatial patterns however they typically require large amounts of data to train while CNN’s handle spatial patterns runs or recurrent neural networks are designed to handle sequential data such as audio signals or time series data their use has declined recently with

Recurrent Neural Networks (RNN)

the rise of the Transformer architecture which we’ll discuss next but it’s still important to understand the idea behind runs unlike strictly feedforward networks run’s introduce feedback loops where the output from one neuron or layer can feed back into previous neurons or layers this gives the network a sort of memory a popular variant of RNs n is the long short-term memory or lstm network which selectively decides what information to

keep at each time step enabling learning over longer time spans some use cases of RNN include language modeling such as predicting the next word in a sentence machine translation such as translating sentences from one language to another and handling any sequential data such as stock prices and weather forecasting trans formers are a newer architecture that use self attention to process sequences in parallel unlike rnn’s they have become the state-of-the-art for natural language processing or NLP and if you didn’t know the T in chat GPT stands for Transformer in addition to NLP Transformers are also used in computer vision Transformers were first introduced,

in the 2017 paper by Google researchers in the paper attempt attorn is all you need they were initially designed for language translation but their key Innovation the self attention enables models to understand language grammar and context effectively for example the two sentences the stealing fans were spinning so fast and she has a lot of fans on social media in both sentences the word fans appears but it has different meanings depending on the context it a Transformer can distinguish between the meaning of the word fans through self-attention since it considers the entire sentence contextually even as the sentence or context length increases significantly there’s obviously way more to Transformers than this simple introduction and I’m actually still learning about it myself if you would like me to dive more into the Transformer architecture please let me know it’s also worth mentioning that in my field engineering there is a a growing demand for physics informed neural networks or pins which integrate physics-based principles directly

into their architecture and training process their architecture embeds prior knowledge of physical systems and carefully designed loss functions that enforce adherence to physical laws and as I mentioned this is just a very general overview of some of the different neural network architectures that exist and are commonly used I’ll be linking a couple of resources for you in the description if you would like to learn more about any of these and in the next Post I’ll be doing a simple coding exercise to demonstrate neural networks in action I will focus on feed forward and convolutional neural network architectures as those are generally the easiest to learn and implement

Leave a Reply